Explained: Why this mathematician thinks OpenAI isn’t acing the International Mathematical Olympiad — and might be ‘cheating’ to win gold

TL;DR

- AI isn’t taking the real test: GPT models “solving” International Mathematical Olympiad (IMO) problems are often operating under very different conditions: rewrites, retries, human edits.
- Tao’s warning: Fields Medalist Terence Tao says comparing these AI outputs to real IMO scores is misleading because the rules are entirely different.
- Behind the curtain: Teams often cherry-pick successes, rewrite problems, and discard failures before showing the best output.
- It’s not cheating, but it’s not fair play: The AI isn’t sitting in silence under timed pressure; it’s basically Iron Man in a school exam hall.
- Main takeaway: Don’t mistake polished AI outputs under ideal lab conditions for human-level reasoning under Olympiad pressure.

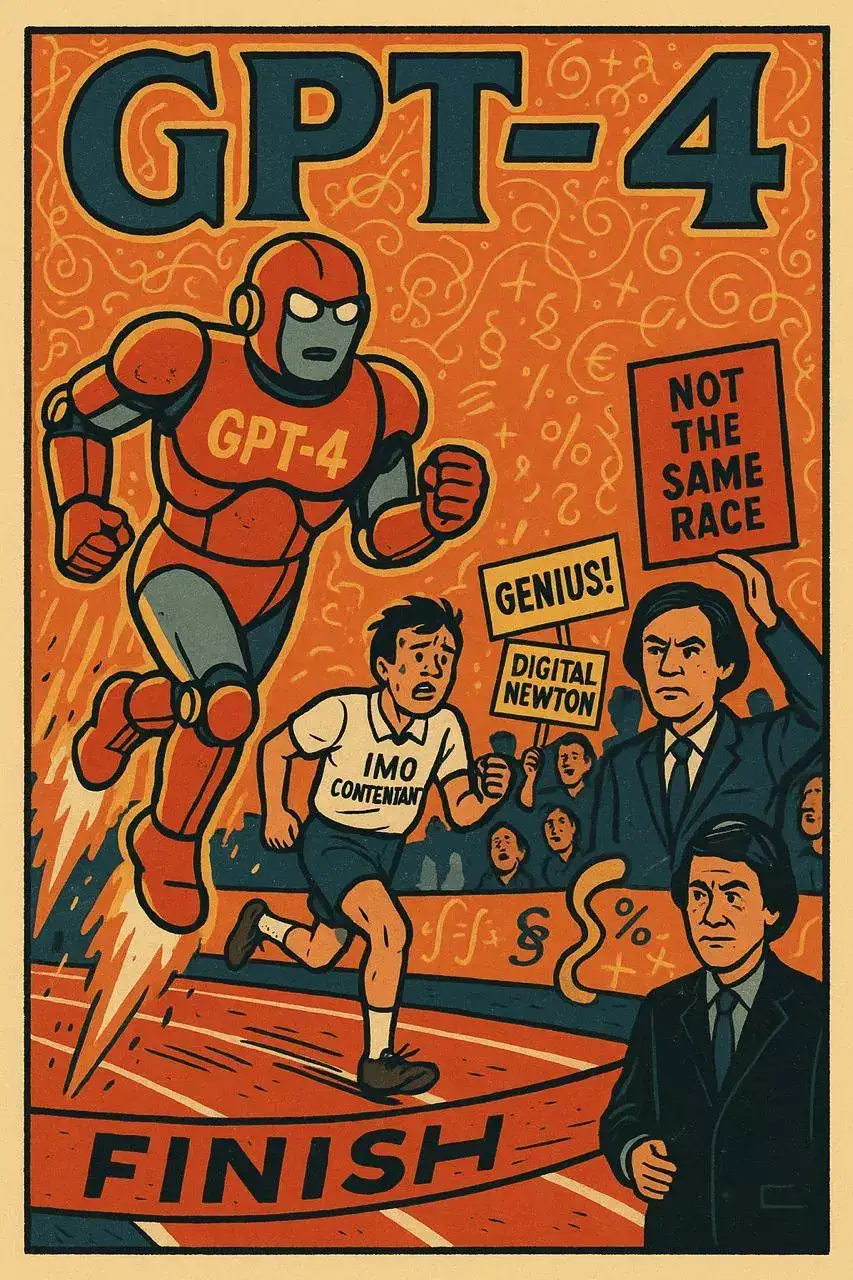

Led Zeppelin once sang, “There’s a lady who’s sure all that glitters is gold.” But in the age of artificial intelligence, even the shimmer of mathematical brilliance needs closer scrutiny. These days, social media lights up every time a language model like GPT-4 is said to have solved a problem from the International Mathematical Olympiad (IMO), a competition so elite it makes Ivy League entrance exams look like warm-up puzzles.

“This AI solved an IMO question!” “Superintelligence is here!” “We’re witnessing the birth of a digital Newton!” Or so the chorus goes.

But one of the greatest living mathematicians isn’t singing along. Terence Tao, a Fields Medal–winning professor at UCLA, has waded into the hype with a calm, clinical reminder: AI models aren’t playing by the same rules. And if the rules aren’t the same, the gold medal doesn’t mean the same thing.

The Setup: What the IMO Actually Demands

The International Mathematical Olympiad is the Olympics of high school math. Students from around the world train for years to face six unspeakably hard problems over two days. They get 4.5 hours per day, with no calculators, no internet, and no collaboration: just a pen, a problem, and their own mind.

Solving even one problem in full is an achievement. Each problem is worth 7 points, and five perfect solutions is typically enough for gold. Solve all six and you enter the realm of myth. Tao himself is part of Olympiad lore: he won an IMO gold medal at age 13.

So when an AI is said to “solve” an IMO question, it’s important to ask: under what conditions?

Enter Tao: The IMO, Rewritten (Literally)

In a detailed Mastodon post, Tao explains that many AI demonstrations that showcase Olympiad-level problem solving do so under dramatically altered conditions. He outlines a hypothetical scenario that, he suggests, resembles what can happen behind the scenes: “The team leader… gives them days instead of hours to solve a question, lets them rewrite the question in a more convenient formulation, allows calculators and internet searches, gives hints, lets all six team members work together, and then only submits the best of the six solutions… quietly withdrawing from problems that none of the team members manage to solve.”

In other words: cherry-picking, rewording, retries, collaboration, and silence around failure. It’s not quite cheating, but it’s not the IMO either. It’s an AI-friendly reconstruction of the Olympiad, where the scoreboard is controlled by the people training the system.

From Bronze to Gold (If You Rewrite the Test)

Tao’s criticism isn’t just about fairness; it’s about what we’re really evaluating. He writes, “A student who might not even earn a bronze medal under the standard IMO rules could earn a ‘gold medal’ under these alternate rules, not because their intrinsic ability has improved, but because the rules have changed.”

This is the crux. AI isn’t solving problems like a student. It’s performing in a lab, with handlers, retries, and tools. What looks like genius is often a heavily scaffolded pipeline of failed attempts, reruns, and prompt rewrites. The only thing the public sees is the polished output.

Tao doesn’t deny that AI has made remarkable progress. But he warns against blurring the lines between performance under ideal conditions and human-level problem-solving in strict, unforgiving settings.
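To see how much the “best of six” rule alone can move the needle, here is a minimal Python sketch. It assumes, purely for illustration, that every attempt succeeds independently with the same fixed probability; real attempts are rarely independent, and the 20% per-attempt figure is hypothetical, not taken from Tao’s post or from any benchmark.

```python
# Illustrative sketch only: independence between attempts and the
# per-attempt success rate used below are assumptions, not real data.


def best_of_n_success(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds,
    when each attempt succeeds with probability p and only the best
    attempt is reported."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 1:
        raise ValueError("n must be at least 1")
    # Every attempt fails with probability (1 - p) ** n; report the complement.
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    single = best_of_n_success(0.2, 1)  # one attempt, as in the real exam
    six = best_of_n_success(0.2, 6)     # best of six, as in Tao's scenario
    print(f"one attempt: {single:.0%}, best of six: {six:.0%}")
```

Under these assumptions, a solver that succeeds one time in five on a single attempt appears to succeed about 74% of the time once only the best of six attempts is shown, even though its per-attempt ability has not changed at all.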

Apples to Oranges — and Cyborg Oranges

Tao is careful not to throw cold water on AI research. But he urges a reality check: “One should be wary of making apples-to-apples comparisons between the performance of various AI models (or between such models and the human contestants) unless one is confident that they were subject to the same set of rules.”

A tweet that says “GPT-4 solved this problem” often omits what really happened:

- Was the prompt rewritten ten times?
- Did the model try and fail repeatedly?
- Were the failures silently discarded?
- Was the answer chosen and edited by a human?

Compare that to a teenager in an exam hall, sweating out one solution in 4.5 hours with no safety net. The playing field isn’t level: it’s two entirely different games.

The Bottom Line

Terence Tao doesn’t claim that AI is incapable of mathematical insight. What he insists on is clarity of conditions. If AI wants to claim a gold medal, it should sit the same exam, with the same constraints and the same risks of failure.

Right now, it’s as if Iron Man entered a sprint race, flew across the finish line, and people started asking if he’s the next Usain Bolt. The AI didn’t cheat. But someone forgot to mention it wasn’t really racing.

And so we return to that Led Zeppelin lyric: “There’s a lady who’s sure all that glitters is gold.” In 2025, that lady might be your algorithmic feed. And that gold? It’s probably just polished scaffolding.

FAQ: AI, the IMO, and Terence Tao’s Critique

Q1: What is the International Mathematical Olympiad (IMO)?
It’s the world’s toughest math competition for high schoolers: six extremely challenging problems solved over two 4.5-hour sessions, with no internet, no calculators, and no teamwork.

Q2: What’s the controversy with AI and IMO questions?
AI models like GPT-4 are shown to “solve” IMO problems, but often with major help: problem rewrites, unlimited retries, internet access, collaboration, and selective publishing of only the successful attempts.

Q3: Who raised concerns about this?
Terence Tao, one of the greatest mathematicians alive and an IMO gold medalist himself, called out this discrepancy in a Mastodon post.

Q4: Is this AI cheating?
Not exactly. But Tao argues that changing the rules makes it a different contest altogether, so comparing lab-optimised AI to real students is unfair and misleading.

Q5: What’s Tao’s main point?
He urges clarity. If we’re going to say an AI “solved” a problem, we must also disclose the conditions; otherwise, it’s like comparing a cyborg sprinter to a high school track star and pretending they’re equals.

Q6: Does Tao oppose AI?
No. He recognises AI’s impressive progress in math, but wants honesty about what it means, and doesn’t mean, for genuine problem-solving ability.

Q7: What should change?
If AI is to be judged against human benchmarks like the IMO, it must be subjected to the same constraints: time limits, no edits, no retries, no external tools.

The bottom line? If you want to claim gold, don’t fly across the finish line in an Iron Man suit and pretend you ran.